When working with AI, many people focus on writing prompts. But there's a more fundamental concept you should prioritise: Policies. If you’re not yet familiar with the concept of Policy, we suggest starting with our earlier post before reading on.

While there's overlap between Policies and prompts, they serve fundamentally different purposes. In brief: A Policy precisely defines what you want to accomplish - your task, objective, or goal. Policies must be unambiguous. A prompt, in contrast, is a specific implementation technique to elicit this desired behaviour from a particular AI model. It might seem subtle, but Policies are orders of magnitude more valuable than prompts if you want to build AI that actually works. Here’s why…

Language Models Don’t Think Like Humans

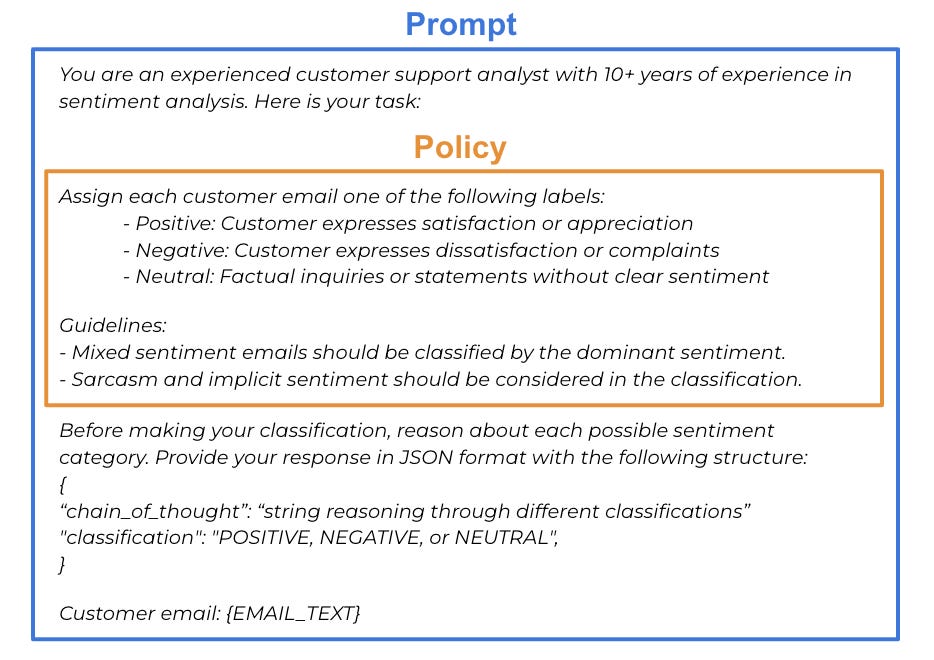

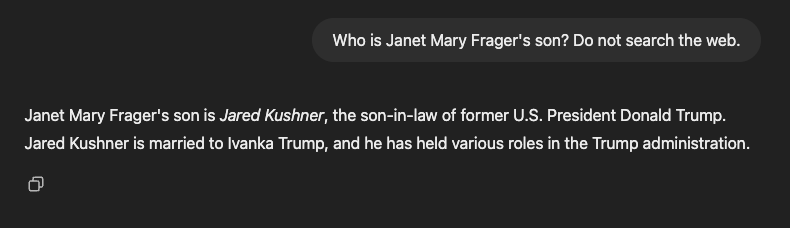

We love to anthropomorphise language models. So many of us feel compelled to say "please" and "thank you" to ChatGPT yet find it ridiculous to do this in a Google search. However, this human-like facade is misleading. Language models process information differently from humans, leading to surprising limitations. Consider this example:

ChatGPT answers this question correctly. If we reverse this question and instead ask about Janet Mary Frager’s son, we should expect a similarly straightforward answer about Tom Hanks. However:

This "reversal curse" is just one example of the fundamental differences between human and language model intelligence. These differences are the reason why Policies and prompts are not the same. To get the behaviour we want, it’s not enough to simply ask for what we want; we also need to ask in the right way.

The Dark Art of Prompting

Prompting is the technique of eliciting desired behaviour from a language model. An effective prompt often includes elements that aren’t a straightforward description of the task but are necessary to get a model to perform well, such as:

Adopting a persona: “You're an expert blog post copy editor” shapes the AI's response style, but isn't necessary for a human performing the same task.

Chain of thought: Instructions on how to reason through a problem are useful in a prompt, but do not define the task itself. E.g. Step 1: “...”, Step 2: “...”

In-context examples: Providing example input-output pairs in a prompt often makes huge differences, but these aren’t usually required in a Policy.

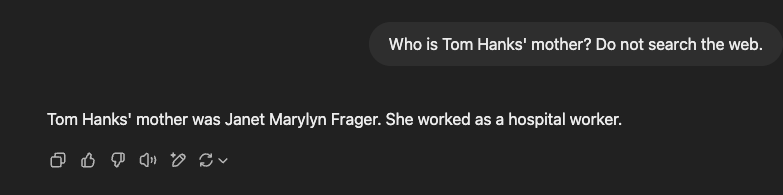

Each language model has unique quirks, making prompts and models tightly coupled. Just like in the Tom Hanks example above, it’s important to ask “in the right way” to get what you want. Policies, in contrast, are model-agnostic and focused on your actual objectives. Here’s an example of a prompt and Policy:

The Policy is straightforward: understand sentiment and classify emails accordingly. The prompt, however, contains implementation-specific elements like the customer support analyst persona, instructions about how to reason, and a structured output format - none of which define the fundamental task but rather help the model perform it effectively. These prompting techniques change over time as new models are released, so spending too much time refining prompts is a mistake. Enduring value lies in what you actually want your model to do - your Policy. Besides, the need for these prompting dark arts is starting to fade…
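One way to picture this separation in code is to keep the Policy as plain, model-agnostic data and build the prompt around it. The sketch below is illustrative only: the `Policy` class, the `build_prompt` function, the label set, and all of the wording are hypothetical and are not taken from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Model-agnostic statement of the task (hypothetical wording)."""
    objective: str
    labels: tuple


def build_prompt(policy: Policy, email: str) -> str:
    """Wrap the Policy in prompting techniques aimed at one particular model."""
    # Persona: shapes the response style, not part of the task definition.
    persona = "You are an experienced customer support analyst."
    # Chain of thought: guidance on how to reason, not what the task is.
    reasoning = (
        "Step 1: Identify the main issue raised in the email.\n"
        "Step 2: Note any words that signal how the customer feels.\n"
        "Step 3: Decide which label fits best."
    )
    # Structured output: makes the answer easy to parse downstream.
    output_format = (
        'Respond with JSON only: {"sentiment": "<one of: '
        + ", ".join(policy.labels)
        + '>"}'
    )
    return "\n\n".join(
        [persona, f"Task: {policy.objective}", reasoning, output_format, f"Email:\n{email}"]
    )


# The Policy stays the same even if the prompt is rewritten for a new model.
EMAIL_SENTIMENT_POLICY = Policy(
    objective="Classify the sentiment of each customer email.",
    labels=("positive", "neutral", "negative"),
)

prompt = build_prompt(
    EMAIL_SENTIMENT_POLICY,
    "My order arrived broken and nobody has replied.",
)
print(prompt)
```

Swapping models would mean rewriting `build_prompt`, while `EMAIL_SENTIMENT_POLICY` would not need to change.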

The Convergence of Prompts and Policies

Prompts and Policies have been converging over time. In the early days of language models like GPT-2, prompting the model for translation looked something like this:

An accurate translation of “There are three r's in Strawberry” to Spanish is: “

With modern LLMs fine-tuned to follow instructions, we can be more direct:

Translate “There are three r's in Strawberry” to Spanish.
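The two styles can be sketched as small prompt builders. This is a minimal illustration with hypothetical function names; no model is called, the functions only assemble the prompt strings shown above.

```python
def completion_style_prompt(text: str, language: str) -> str:
    # Early models simply continue text, so the prompt is phrased as the
    # start of a sentence that the translation would naturally complete.
    return f"An accurate translation of “{text}” to {language} is: “"


def instruction_style_prompt(text: str, language: str) -> str:
    # Instruction-tuned models can be asked for the result directly.
    return f"Translate “{text}” to {language}."


sentence = "There are three r's in Strawberry"
print(completion_style_prompt(sentence, "Spanish"))
print(instruction_style_prompt(sentence, "Spanish"))
```

The task is identical in both cases; only the wrapping around it differs.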

This evolution isn't limited to instruction-following. Reasoning models like OpenAI's o3 or DeepSeek's R1 now automate chain-of-thought reasoning that previously required explicit prompting. As language models improve, they increasingly understand the intent behind instructions without relying on prompting tricks. As the trend continues, well-written Policies become even more valuable, and prompts become a minor implementation detail.

Why This Matters

Separating what you want (your Policy) from how you get it (your prompt) creates a strong foundation on which to build AI and gives technical and business teams a common language. Policies make it possible to label data consistently by defining, unambiguously, exactly what you want. This labelled data enables you to objectively measure output quality. Unlike prompts, Policies represent the enduring value of your AI system - not some flavour-of-the-month model or prompting trick.
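As a rough sketch of what measuring against labelled data can look like, the snippet below scores a classifier against a handful of examples labelled according to a Policy. Everything here is made up for illustration: the emails, the labels, and `keyword_baseline`, which is a stand-in for a real model call.

```python
from typing import Callable, Sequence, Tuple


def accuracy(
    classify: Callable[[str], str],
    labelled: Sequence[Tuple[str, str]],
) -> float:
    """Fraction of examples where the classifier matches the Policy label."""
    if not labelled:
        raise ValueError("need at least one labelled example")
    correct = sum(1 for text, label in labelled if classify(text) == label)
    return correct / len(labelled)


def keyword_baseline(email: str) -> str:
    """Hypothetical stand-in for a prompted model."""
    return "negative" if "broken" in email.lower() else "positive"


# Hypothetical emails, labelled by people following the same Policy.
LABELLED_EMAILS = [
    ("My order arrived broken.", "negative"),
    ("Thanks, the replacement works perfectly!", "positive"),
    ("The box was fine but the item is broken.", "negative"),
    ("Still waiting for a reply after two weeks.", "negative"),
]

score = accuracy(keyword_baseline, LABELLED_EMAILS)
print(f"accuracy: {score:.2f}")  # prints "accuracy: 0.75"
```

Because the labels come from the Policy rather than from any prompt, the same labelled set can be reused to compare different prompts or different models.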

Writing a good Policy is hard. It's a process of careful trial and error that often requires dozens of iterations to get right. However, the difference between a good Policy and a bad one is the difference between AI that actually works and AI that doesn’t get past demoware.

Wrapping Up

This post explains the difference between a Policy and a prompt, and why understanding this is crucial for building AI that actually works.

Policies unambiguously define objectives. Prompts elicit behaviour from specific models.

The fundamental differences between human and language model intelligence make this distinction crucial.

Prompting is an implementation detail that will continue to simplify over time. The enduring value lies in your Policy.

Want to work on your Policy with the authors of this post? You can find us at https://artanis.ai/