Pre-reads for context

If you’re not yet confident with building AI using real-world data, start with our series How To Build AI That Actually Works. It’s made up of four 5-minute posts and doesn’t require prior AI experience.

If you’re confident with AI but have never heard of Policy, that’s because we invented it! You should start with our post on how Policy is the missing core of the AI stack.

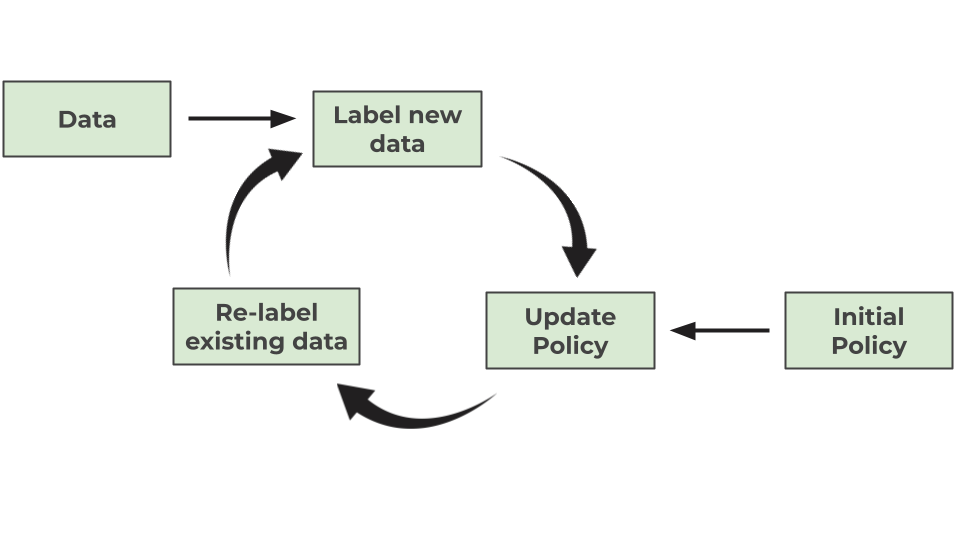

To recap: we argue that labelling a dataset must start with defining what you want from your model in unambiguous terms. We call this definition your Policy. However, this is a very simplified argument. In reality, writing your Policy and labelling data is an iterative process in which each informs the other. We call this process the Policy-Data Loop.

The Policy-Data Loop

Your initial Policy will be underspecified, and that’s ok. It can even be a single sentence about what you want your AI to do.

Grade the English essay. Give them 5 marks for spelling accurately.

You then start labelling data. As you label, you will probably notice your Policy is wrong in some way. For example:

<This is my whole essay, goodbye.>

Arguably this gets 5 out of 5 marks according to the original Policy. But this doesn’t feel right: would we really give top marks to a student who wrote a single sentence?! Probably not, so you update the Policy.

+ … They must use a wide range of vocabulary.

You relabel the data, this time giving the essay just 1 mark. Then you look at the next essay.

<This is my whole essay. Here’s a speling mistake, in the presence of diverse vocabulary. Next time I should write more carefully!>

Now you’re not sure what to do. The student uses a wider range of vocabulary, but has now made a spelling mistake. Arguably this could get anywhere from 0 to 5 marks with the current Policy! So we need to keep improving the Policy, and relabelling data as we make changes. This process of iteratively updating our Policy, based on data, is the Policy-Data Loop. After a few iterations, we end up with a well-written Policy:

Give them 5 marks if they:

- Make zero spelling mistakes in the essay.
- Use a wide range of vocabulary. A wide range means at least one adjective, adverb, noun and pronoun.
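Because this Policy is unambiguous, it can be checked mechanically. The sketch below is a hypothetical illustration only: it assumes spelling mistakes have already been counted and each word has already been tagged with a Universal Dependencies part-of-speech tag (`ADJ`, `ADV`, `NOUN`, `PRON`) by some upstream tool. `apply_policy` and `REQUIRED_POS` are illustrative names. The Policy as written only says when to give 5 marks, so the function returns `None` for essays it doesn’t cover rather than inventing a score.

```python
from typing import Iterable, Optional

# Universal Dependencies POS tags that together count as a "wide range" of vocabulary.
REQUIRED_POS = {"ADJ", "ADV", "NOUN", "PRON"}


def apply_policy(spelling_mistakes: int, pos_tags: Iterable[str]) -> Optional[int]:
    """Return 5 if the essay meets the Policy, otherwise None.

    Hypothetical inputs: spelling mistakes counted and words POS-tagged upstream.
    None means "this Policy does not say", a cue to go back round the loop.
    """
    zero_mistakes = spelling_mistakes == 0
    wide_vocabulary = REQUIRED_POS.issubset(set(pos_tags))
    return 5 if zero_mistakes and wide_vocabulary else None


# Illustrative tags for "This is my whole essay, goodbye." (no adverb present).
print(apply_policy(0, ["PRON", "AUX", "PRON", "ADJ", "NOUN", "INTJ"]))  # None
```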

This Policy describes exactly what we want our model to do in unambiguous terms. We’re now well on our way to building AI that actually works.

If your Policy grows too long, you may need to break your AI problem into smaller problems. We explain why and how to break a problem down in How to Build AI That Actually Works.

How many times should you repeat the Policy-Data Loop?

First, an analogy with Product-Market Fit. When is your product good enough? Your product meets the needs of the market when you’ve iterated to the point where customers are clearly satisfied with it (for example, high retention and referral rates).

Now think of your Policy as the product and your data as the market. How much data should you label? You keep going through the Policy-Data Loop until you no longer need to update your Policy to fit new data. At this point, you have “Policy-Data Fit”.
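One way to make Policy-Data Fit concrete is as a stopping rule. The sketch below is hypothetical: `label` stands in for a human labeller applying the current Policy, `revise` stands in for the labeller noticing that an example doesn’t fit and returning an updated Policy (or `None` if it fits), and `patience`, the number of consecutive batches that must pass without a Policy change, is an assumed knob rather than something prescribed here.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Example = str
Labelled = Tuple[Example, int]


def policy_data_loop(
    policy: str,
    batches: Iterable[List[Example]],
    label: Callable[[str, Example], int],
    revise: Callable[[str, Example], Optional[str]],
    patience: int = 1,
) -> Tuple[str, List[Labelled]]:
    """Iterate Policy and labels until `patience` batches in a row need no Policy change."""
    seen: List[Example] = []
    labelled: List[Labelled] = []
    clean_batches = 0
    for batch in batches:
        changed = False
        for example in batch:
            new_policy = revise(policy, example)
            if new_policy is not None and new_policy != policy:
                policy, changed = new_policy, True
        seen.extend(batch)
        # Relabel everything seen so far under the latest Policy so no label is stale.
        labelled = [(ex, label(policy, ex)) for ex in seen]
        clean_batches = 0 if changed else clean_batches + 1
        if clean_batches >= patience:
            break  # Policy-Data Fit: new data no longer forces Policy updates.
    return policy, labelled
```

Relabelling all seen data after every batch is the simplest way to keep labels consistent with the Policy; a real workflow might only relabel the examples a Policy change actually affects.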

The dataset can now be used to evaluate the accuracy of your AI system. Automatic evaluation is powerful because it lets you iterate quickly on the AI until its output is good enough for you to trust it. Reminder: the market decides what’s good enough, not your engineers! Once it’s trustworthy, you deploy it and launch to users.
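Once the labels fit the Policy, automatic evaluation can be as simple as comparing model output with those labels. A minimal sketch, assuming each label is an exact mark and exact-match accuracy is the metric; `model` is a hypothetical stand-in for your AI system, the second essay and its mark are made up for illustration, and the threshold for "good enough" is left to the caller because the market sets it.

```python
from typing import Callable, Sequence, Tuple


def accuracy(model: Callable[[str], int], dataset: Sequence[Tuple[str, int]]) -> float:
    """Fraction of labelled examples where the model's mark matches the label."""
    if not dataset:
        raise ValueError("dataset is empty")
    correct = sum(1 for essay, mark in dataset if model(essay) == mark)
    return correct / len(dataset)


# Hypothetical labelled essays and a trivial model that always gives 1 mark.
dataset = [
    ("This is my whole essay, goodbye.", 1),
    ("A varied, carefully spelled essay.", 5),
]
print(accuracy(lambda essay: 1, dataset))  # 0.5
```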

Policy as a living document

After you launch, it’s important to monitor the inputs and outputs of your AI so you can understand how it’s performing. It’s likely your AI won’t do exactly what you want in all cases. Users will interact in ways you hadn’t anticipated. When this happens, it’s time to re-enter the Policy-Data Loop, labelling the new data and updating your Policy to fit it.

At this point, version control for both Policy and data becomes essential. When the Policy changes, you’ll need to know whether each label reflects the most recent Policy or a stale version. You do not want to get caught with your pants down because some of your data was labelled according to a stale Policy and you aren’t aware of it. This leads to inconsistent labels, which is a disaster for evaluation: your AI ends up being scored against two different definitions of what’s correct.
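A lightweight way to catch stale labels is to record, alongside every label, the version of the Policy it was made under. The sketch below assumes a hypothetical scheme where the version is a short SHA-256 hash of the Policy text, so any edit to the Policy yields a new version; in practice you might instead use a commit hash from whatever version control system holds your Policy. `LabelledExample`, `policy_version` and `stale_examples` are illustrative names.

```python
import hashlib
from dataclasses import dataclass
from typing import List


def policy_version(policy_text: str) -> str:
    """Hypothetical versioning scheme: a short hash of the exact Policy text."""
    return hashlib.sha256(policy_text.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class LabelledExample:
    text: str
    mark: int
    policy_version: str  # version of the Policy this label was made under


def stale_examples(dataset: List[LabelledExample], current_policy: str) -> List[LabelledExample]:
    """Return examples labelled under any Policy other than the current one."""
    current = policy_version(current_policy)
    return [ex for ex in dataset if ex.policy_version != current]


v1 = "Grade the English essay. Give them 5 marks for spelling accurately."
v2 = v1 + " They must use a wide range of vocabulary."
dataset = [
    # Labelled before the vocabulary rule was added, so it is now stale.
    LabelledExample("This is my whole essay, goodbye.", 5, policy_version(v1)),
    # Hypothetical essay labelled after the update.
    LabelledExample("A hypothetical essay labelled after the update.", 3, policy_version(v2)),
]
print([ex.text for ex in stale_examples(dataset, v2)])
```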

Wrapping Up

This post explains how to operationalise Policy as part of your AI stack.

The first version of a Policy only needs to be good enough to start labelling, because Policy and data are refined together in an iterative process called the Policy-Data Loop.

You should repeat the Policy-Data Loop until your Policy fits the data, at which point you’re ready to build a model.

Finally, you should version control both your Policy and data to avoid inconsistently labelled data.

For help making this all work in practice, you can find us at https://artanis.ai/